the sound of violent silence

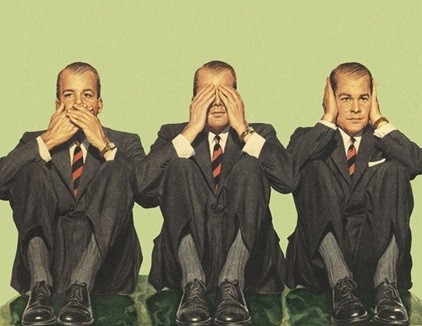

Hello darkness, my old friend

I’ve come to talk with you again

Because a vision softly creeping

Left its seeds while I was sleeping

And the vision that was planted in my brain

Still remains

Within the sound of silence

Simon & Garfunkel

What if we can no longer hear the machines?

I thought about this the other day when someone suggested that over the past few years people have become more aware of algorithms and their effects. I answered that there is a difference between awareness and understanding. Anyway. Technology has certainly been affecting society, and the individuals within it, for centuries, but this particular generation of technology and machines seems to be having a deeper impact on both.

Which leads me to what I believe is happening.

In the past we always had some warning: the ominous sounds of the machines bearing down upon us or pounding in our ears. The new machines are silent. No warnings. Divested of sound, our anxiety has no source. This means that history and the future simultaneously become unseen, unpredictable, and unfolding with no forewarning of what is to come. It suggests that, without warning, we fall into the wretched hollow between stimulus and response. That hollow matters because, typically, we select a response from several possibilities, which vary contextually from place to place and time to time. But bereft of some important cues, which would help identify not only the appropriate response but also the parameters of the place and time, we skate on the superficial surface of algorithms feeding our worst instincts and tendencies, uhm, not the best stuff in us to lean in on.

Which leads me to the superficial surface of human instincts.

The reality is that technology can be quite human-like, but never quite human. Technology, at best, simply mimics humans and human societies. This is dangerous if we become dependent upon technology to help us define how to act as humans and what a human society should look like. Unlike ants, bees, and other non-human animals (which act on genetic codes), we look for cues to the rules of a human society through the uncomfortable participation in connection with other humans. This has been true for generations. Letter writing, telephoning, email, and now platforms like Facebook and Instagram simply augment human interaction. In fact, one could guess that all those types of communication probably only work as well as they do because they augment all the ordinary interaction that goes on at the same time. The reality is that letter writing, telephoning, and even emailing are low-depth communication. High-depth communication includes smelling, touching, tasting, and enough visual definition to see facial expressions and body language. That's important when thinking about stealthy algorithms, because we should always remind ourselves that societies are formed and maintained through people physically interacting with each other. That doesn't mean that, as time goes by and physical connections become established within the societal infrastructure, a growing proportion of interactions cannot take place without face-to-face contact. But. As someone said about the internet: people don't see each other, but only what the software shows them. This creates an ambiguity between what is human and what is just human-like. What are actual images of people, and what are constructed images of people?

And that is important because, if you believe that it is people who form societies, and that the basis of society is formed through their complex interactions, then whether you are dealing with constructed images or reality makes a significant difference.

Which leads me to patience and impatience. Algorithms are infinitely patient while humans are finitely impatient. Years ago, Jaron Lanier warned us that algorithms don't attempt to change us immediately but rather through relentless nudging, a fraction of a percent at a time. Algorithms patiently nudge us, slowly bending the arc of our beliefs and attitudes. They prowl within human impatience, seeking opportunities not just to bend the arc but to shift one's reality. It is within this soundless subtlety that the true danger lurks: unseen, unheard, and unnoticed. How dangerous and how unnoticed? I still have conversations with people in the advertising business, a business whose very premise is nudging people's beliefs and attitudes to affect behavior, who doubt that voting in the 2016 election was affected by Russia, algorithms, and bots. I often wonder: if people in advertising, with real experience of how people's minds can be affected, don't understand how algorithms work or how they can affect people, how can the general public grasp the concept? Its subtlety is vast and relentless and patient. As Noah Smith just noted in a piece on TikTok and Chinese ownership: "overall, the pattern is unmistakable — every single topic that the CCP doesn't want people to talk about is getting suppressed on TikTok." And therein lies the subtlety. It's not that algorithms just highlight content; they simultaneously suppress content, thereby implying less importance (or even less truth). Yeah. Salience matters (for good and bad stuff).

Which leads me to the silence in equilibrium.

Many systems have equilibrium points, but there is a wide variety of types of equilibrium and, in fact, most systems have multiple equilibrium points. Uhm. But what if we can never see them, or sense them, or, worse, are encouraged to believe one is there when it is not? It's fairly easy to sense the dissonance one would feel in life if one embraced a false equilibrium day after day. That person would constantly be at odds with, well, reality. That is, in my mind, the outcome of the sounds of violent silence. Ponder.
